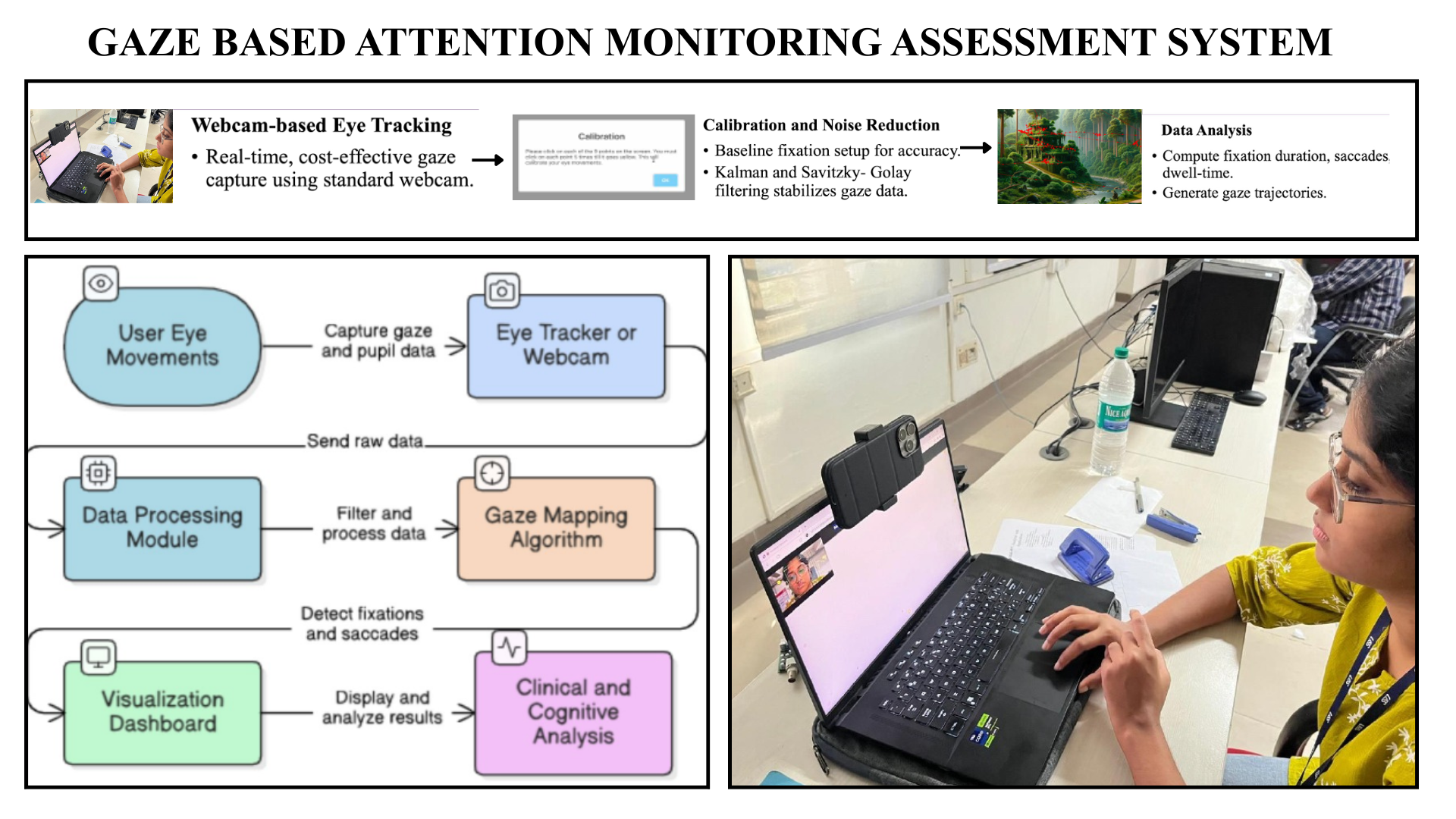

Team 1: Gaze Based Attention Monitoring Assessment System

Augustine Wisely Bezalel (Sri Sivasubramaniya Nadar College of Engineering)

This project uses real-time gaze tracking to monitor and analyse visual attention. Technologies such as WebGazer.js make it possible to use a standard webcam, keeping the system cost-effective. The solution targets education, healthcare, and HCI. During calibration, participants fixate on points to produce baseline gaze data, which accounts for individual differences and improves accuracy. Participants then fixate on specific images following instructions. Kalman filtering minimizes noise and stabilizes the gaze data. Heat maps and other visualizations present gaze intensity and help reveal attention trends. This project is a step toward better monitoring of human attention.
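
The abstract does not specify the Kalman filter's state model. A minimal sketch, assuming a random-walk (constant-position) model applied independently to each screen coordinate; `q`, `r`, and the helper names are hypothetical, not the project's parameters:

```python
from typing import Iterable, List, Optional, Tuple


class ScalarKalman:
    """1-D Kalman filter with a random-walk state model (an assumption)."""

    def __init__(self, q: float = 1.0, r: float = 400.0) -> None:
        self.q = q  # process noise variance: how fast true gaze may drift (assumed)
        self.r = r  # measurement noise variance in px^2 (assumed)
        self.x: Optional[float] = None  # current estimate
        self.p = 0.0  # variance of the current estimate

    def update(self, z: float) -> float:
        if self.x is None:
            # First sample: take it as-is, with measurement-level uncertainty.
            self.x, self.p = z, self.r
            return self.x
        # Predict: the position is assumed constant, so only uncertainty grows.
        self.p += self.q
        # Correct: move toward the measurement in proportion to the Kalman gain.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k
        return self.x


def smooth_gaze(points: Iterable[Tuple[float, float]],
                q: float = 1.0, r: float = 400.0) -> List[Tuple[float, float]]:
    """Filter (x, y) webcam gaze estimates with one filter per axis."""
    fx, fy = ScalarKalman(q, r), ScalarKalman(q, r)
    return [(fx.update(x), fy.update(y)) for x, y in points]
```

A larger `r` relative to `q` gives smoother but laggier output, so the ratio is the main knob to tune against calibration data.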

Intro Slides | Poster

Team 2: NeuroAgent: A Brain-Aware AI Agent Framework for Adaptive Human-Robot Interaction

Aditya Kumar (Indian Institute of Information Technology, Surat)

Traditional Human-Robot Interaction (HRI) systems often lack cognitive smoothness—the seamless alignment between human perceptual states and robotic behavior. This paper introduces NeuroAgent, a brain-aware AI framework designed to perceive, interpret, and adapt to a human partner’s cognitive and emotional state in real time. The architecture fuses multimodal neurophysiological and behavioral signals through three synergistic layers: (1) a Multimodal Sensing Layer that integrates electroencephalography (EEG) for attention, workload, and emotion analysis, electrodermal activity (EDA) for arousal, and behavioral cues such as facial micro-expressions and voice prosody; (2) an AI Cognition Layer, powered by a multi-agent reasoning system built upon Large Language Models (LLMs), where cognitive, behavioral, and conversational agents collaborate using a novel Cognitive Retrieval-Augmented Generation (RAG) pipeline to ground AI reasoning in the user’s live neuro-physiological context; and (3) an Embodied Output Layer, where decisions manifest through physical (ROS-based) or virtual (Unity-based) embodiments, enabling adaptive gestures, modulated speech tone, and dynamic task complexity adjustment.
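
The abstract does not describe how the Cognitive RAG pipeline indexes or retrieves context. One minimal reading of "grounding in the live neuro-physiological context" is to tag guidance snippets with the cognitive state they suit and retrieve those nearest to the current state estimate before prompting the LLM. Everything below (`Snippet`, the three feature names, Euclidean nearest-neighbour retrieval, the prompt template) is hypothetical:

```python
import math
from dataclasses import dataclass
from typing import Dict, List

FEATURES = ("attention", "workload", "arousal")  # hypothetical state estimates in [0, 1]


@dataclass
class Snippet:
    text: str
    state: Dict[str, float]  # cognitive state this guidance is written for


def distance(a: Dict[str, float], b: Dict[str, float]) -> float:
    return math.sqrt(sum((a[f] - b[f]) ** 2 for f in FEATURES))


def retrieve(live_state: Dict[str, float], corpus: List[Snippet], k: int = 2) -> List[Snippet]:
    """Return the k snippets whose state tags are closest to the live state."""
    return sorted(corpus, key=lambda s: distance(live_state, s.state))[:k]


def build_prompt(utterance: str, live_state: Dict[str, float],
                 corpus: List[Snippet], k: int = 2) -> str:
    """Assemble an LLM prompt grounded in state-matched guidance."""
    guidance = "\n".join(f"- {s.text}" for s in retrieve(live_state, corpus, k))
    state = ", ".join(f"{f}={live_state[f]:.2f}" for f in FEATURES)
    return (f"User cognitive state: {state}\n"
            f"Relevant guidance:\n{guidance}\n"
            f"User: {utterance}\nAssistant:")
```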

Intro Slides

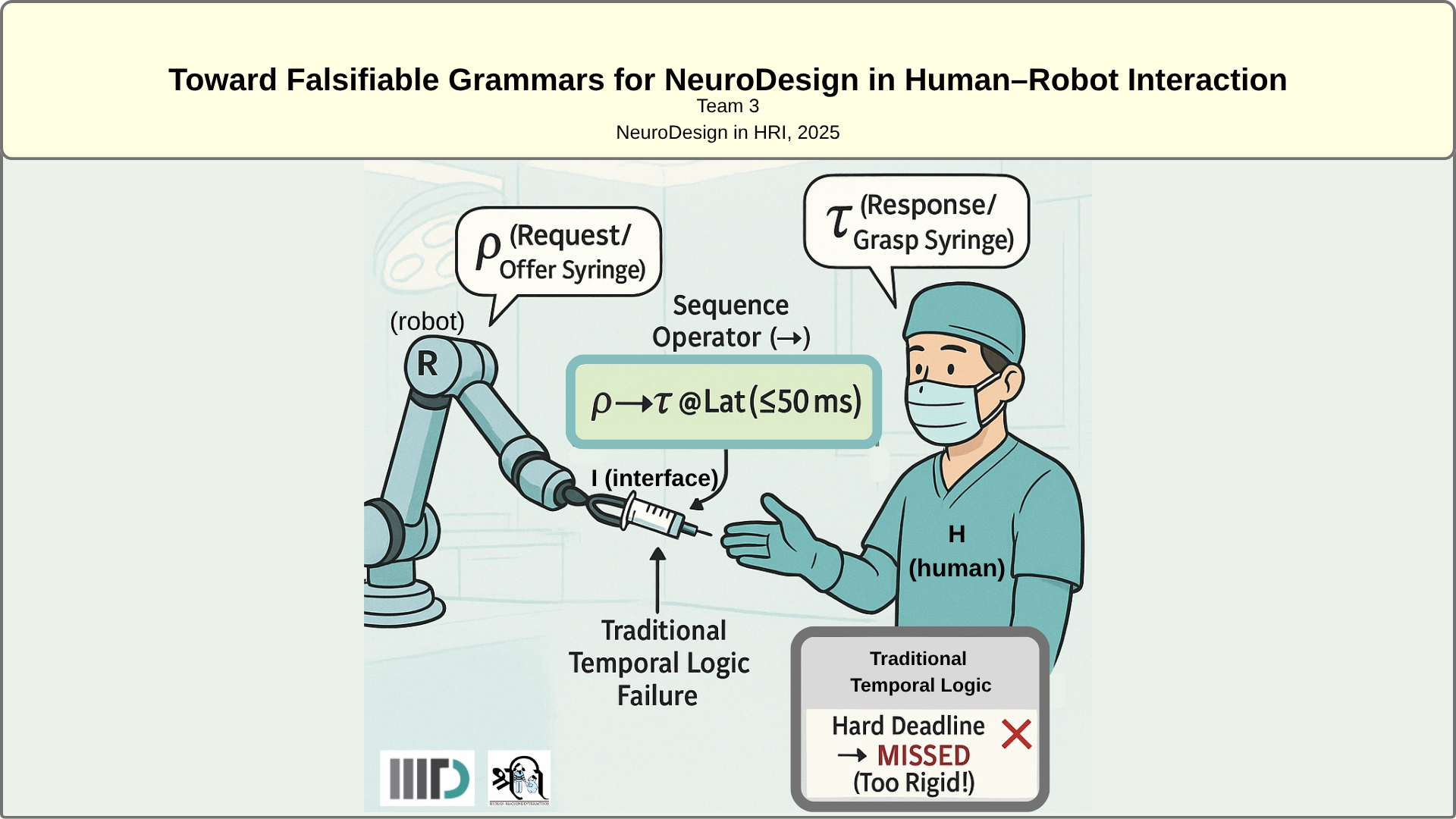

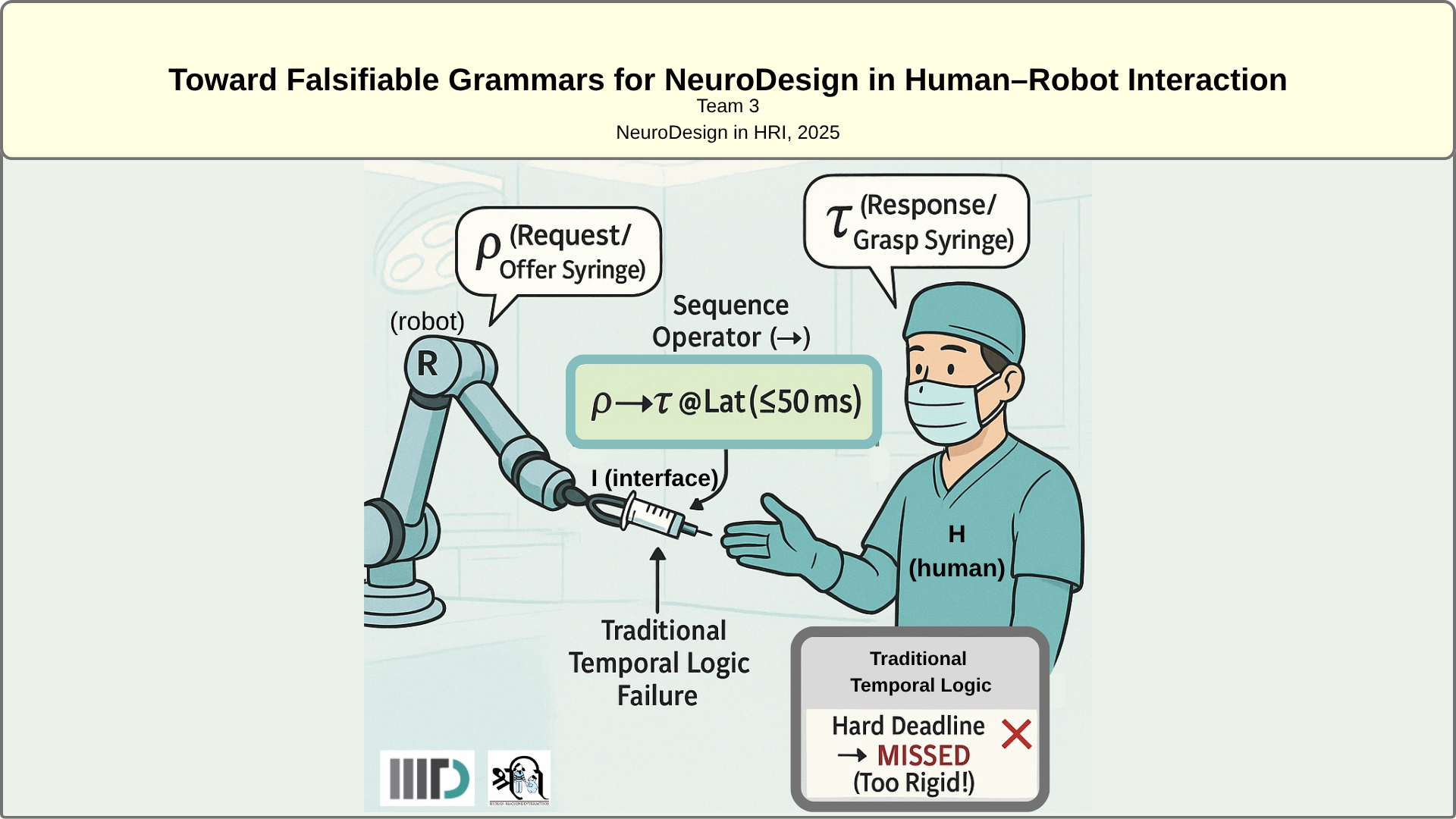

Team 3: Toward Falsifiable Grammars for NeuroDesign in HRI

Vinay Kumar Verma (Indraprastha Institute of Information Technology)

Current HRI and NeuroDesign frameworks lack a formal, verifiable basis for interaction success, detecting failures only post hoc. We introduce a minimal, falsifiable grammar to model neuro-physical coordination using primitives (signal, request, response) and temporal operators (sequence, repair). By binding these operators to measurable neuro-temporal "budgets," such as cognitive latency and sensorimotor synchrony, the grammar specifies when collaboration succeeds, fails, or must repair itself. This transforms HRI from descriptive analysis to a priori verification, enabling auditable, adaptive, and certifiable brain-body interfaces that align formal methods with neuro-cognitive fluidity.
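
The abstract names the primitives and operators but not their formal semantics. The sketch below assumes a simple reading: an episode is a timestamped trace, `sequence` means a response must follow a request within a latency budget, and `repair` grants a bounded extension. The `Event` encoding and the budget values are hypothetical; the point is that each verdict is decided by measurable quantities, so a trace can falsify the specification:

```python
from dataclasses import dataclass
from typing import List

KINDS = {"signal", "request", "response", "repair"}


@dataclass(frozen=True)
class Event:
    kind: str  # one of KINDS (hypothetical trace encoding)
    t: float   # timestamp in seconds


def verdict(trace: List[Event], latency_budget: float, repair_budget: float) -> str:
    """Check one episode against `request ; response` with an optional repair.

    Returns "success" if a response arrives within the latency budget,
    "repaired" if a repair and a later response both land within the
    extended budget, and "failure" otherwise.
    """
    if any(e.kind not in KINDS for e in trace):
        raise ValueError("unknown event kind")
    starts = [e.t for e in trace if e.kind == "request"]
    if not starts:
        raise ValueError("trace contains no request")
    t0 = min(starts)
    responses = sorted(e.t - t0 for e in trace if e.kind == "response" and e.t >= t0)
    repairs = sorted(e.t - t0 for e in trace if e.kind == "repair" and e.t >= t0)
    # Sequence operator: the response must follow the request within budget.
    if responses and responses[0] <= latency_budget:
        return "success"
    # Repair operator: a repair buys extra time, but only up to repair_budget.
    deadline = latency_budget + repair_budget
    if repairs and repairs[0] <= deadline:
        after = [r for r in responses if r >= repairs[0]]
        if after and after[0] <= deadline:
            return "repaired"
    return "failure"
```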

Extended Abstract | Poster

Team 4: VR Controlled Robot Arm: Bridging Virtual Reality and Real-World Robotics

Sushim Saini (Indian Institute of Technology Roorkee)

We developed a low-cost VR-controlled robotic arm that creates a real-time digital twin between the virtual and physical worlds. Movements made through the VR headset and controllers are mirrored instantly by the physical robotic arm, enabling intuitive and immersive human–robot interaction. The design focuses on accessibility, adaptability, and embodied control, using a 3D-printed structure and servo-based actuation. By blending VR and robotics, the system bridges human intention with robotic motion, demonstrating applications in assistive technology, remote operation, and education. Future development aims to integrate cognitive and sensory feedback for more natural, brain-aligned interaction.
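
The abstract does not say how controller poses become servo commands. A common approach for small servo arms, assumed here, is analytic inverse kinematics: the controller position (projected into the arm's vertical plane) is converted into shoulder and elbow angles for a two-link arm, then offset and clamped to a 0 to 180 degree servo range. Link lengths, the offset, and the clamping policy are hypothetical:

```python
import math
from typing import Tuple


def two_link_ik(x: float, y: float, l1: float, l2: float) -> Tuple[float, float]:
    """Shoulder/elbow angles in degrees reaching (x, y); positive-elbow solution."""
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    c2 = max(-1.0, min(1.0, c2))  # out-of-reach targets: stretch toward them
    q2 = math.acos(c2)
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return math.degrees(q1), math.degrees(q2)


def to_servo(angle_deg: float, offset_deg: float = 90.0) -> int:
    """Map a joint angle to a hobby-servo command, clamped to [0, 180]."""
    return int(round(max(0.0, min(180.0, angle_deg + offset_deg))))
```

Clamping instead of rejecting unreachable targets keeps the arm responsive when the operator's hand moves beyond its workspace.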

Intro Slides | Short Video | Poster

Team 5: The Goldilocks Problem: The Smartphone Based Search for 'Just Right' Control in Human-Machine Systems

Tanisha Majumdar (Indian Institute of Technology Delhi (IIT-D))

We present HAMOT (Hand Motion Object Tracking), a smartphone-based sensorimotor testbed prototype with a MATLAB GUI that employs information-theoretic benchmarking to optimize human-machine control paradigms. The platform maps wrist flexion/extension to cursor movements and systematically compares position and velocity control under perturbations that mirror adaptive-device challenges: variable gain, noise, latency, and nonlinearity. Performance metrics reveal granular behavioral profiles of users (specifically error-correction strategies, cognitive flexibility, and sensorimotor resilience) that accurately map users' cognitive states and motor intent for use in human-robot collaboration. HAMOT's accessible architecture enables the transition from controlled laboratory experiments to interactive out-of-lab perception studies and real-world deployment in assistive robotics.
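
The two control laws HAMOT compares, and the perturbations it applies, can be sketched as a small simulation. This is not the project's MATLAB code: Gaussian noise, latency as a fixed sample delay, the power-law nonlinearity, and all parameter names are assumptions for illustration, and the information-theoretic scoring is omitted:

```python
import math
import random
from collections import deque
from typing import List, Sequence


def simulate_cursor(angles: Sequence[float], mode: str, gain: float = 1.0,
                    latency_steps: int = 0, noise_sd: float = 0.0,
                    exponent: float = 1.0, dt: float = 0.01, seed: int = 0) -> List[float]:
    """Map wrist angles to cursor positions under control-law perturbations.

    mode="position": cursor = gain * f(angle)
    mode="velocity": cursor += gain * f(angle) * dt
    f adds Gaussian noise, then a delay of `latency_steps` samples, then a
    power-law nonlinearity sign(a) * |a| ** exponent.
    """
    rng = random.Random(seed)
    delay = deque([0.0] * latency_steps)  # FIFO delay line models latency
    cursor, trace = 0.0, []
    for a in angles:
        delay.append(a + rng.gauss(0.0, noise_sd))
        d = delay.popleft()
        shaped = math.copysign(abs(d) ** exponent, d)
        if mode == "position":
            cursor = gain * shaped           # angle sets cursor location directly
        elif mode == "velocity":
            cursor += gain * shaped * dt     # angle sets cursor speed
        else:
            raise ValueError(f"unknown mode: {mode}")
        trace.append(cursor)
    return trace
```

Under position control, latency shifts the trace in time; under velocity control, the same delay also integrates into overshoot, which is one reason the two laws can rank differently per user.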

Intro Slides | Poster

Team 6: Neuroprosthetic Embodiment: From Neural Flux to Self Beyond

Hozan Mohammadi (National Brain Mapping Laboratory (NBML))

Human-machine integration remains a challenge due to the inability of robotic systems to intuitively align with individual intent, agency, and embodiment. This work proposes a neuroprosthetic and avatar-based feedback framework that translates stochastic neural and sensorimotor signals into coherent, hybrid selfhood experiences. By integrating neuroprosthetic control, multimodal sensory feedback, and immersive avatar representation, we investigate the emergence of adaptive, participatory embodiment in real-time human-robot interactions through the lens of NeuroDesign principles, optimizing cognitive load, emotion-aware interaction, and intuitive brain-centered control. This approach positions brain-centric experience as a driver of HRI innovation, leveraging closed-loop feedback to enhance agency, ownership, and presence, while laying a foundation for bio-compatible, market-ready neuroadaptive technologies.

Extended Abstract

Team 7: Combining SOFT and RIGID exoskeletons: adding pronosupination to AGREE

Noemi Sacco (Politecnico di Milano)

My thesis project encompasses the manufacturing and control design of a soft pneumatic exosuit for forearm pronation/supination, developed at the Soft NeuroBionics Lab of Scuola Superiore Sant’Anna in Pisa (R. Ferroni et al., 2025). The ultimate goal of the work is to integrate this module into an existing rigid upper-limb exoskeleton called AGREE (S. Dalla Gasperina et al., 2023), developed at Politecnico di Milano.

Intro Slides

Team 8: Deep Learning-Based EEG Classification for Virtual Reality Mobile Robot Control in Dementia Patients Using DL

Youcef YAHI (University of Rome II – Tor Vergata)

This study presents a deep learning–based brain–computer interface (BCI) system designed to enable patients suffering from dementia to control a virtual reality (VR) mobile robot through electroencephalogram (EEG) signals. The proposed framework employs a hybrid Convolutional Neural Network combined with Bidirectional Long Short-Term Memory (CNN-BiLSTM) architecture to classify motor imagery EEG patterns associated with four directional commands: forward, backward, left, and right. EEG data were preprocessed to remove artifacts and segmented into epochs corresponding to each intended movement. The CNN layers effectively extracted spatial–spectral features from the EEG signals, while the BiLSTM layers captured the temporal dependencies critical for robust decoding of neural activity. The trained model achieved an average classification accuracy of 72.47%, demonstrating reliable translation of cognitive intentions into robot control commands within the VR environment. These results highlight the potential of hybrid deep learning architectures for restoring mobility and interaction capabilities in patients with cognitive impairments such as dementia, offering a promising pathway toward immersive neuro-rehabilitation and assistive robotic systems.
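
The CNN-BiLSTM itself depends on a deep-learning framework, but the segmentation step described above can be shown in plain Python. A minimal sketch, assuming channel-major signals, cue onsets given as sample indices, and a fixed window relative to each cue; the label order, window bounds, and function name are hypothetical:

```python
from typing import List, Sequence, Tuple

LABELS = ("forward", "backward", "left", "right")  # hypothetical label order


def epoch(signal: Sequence[Sequence[float]], events: Sequence[Tuple[int, str]],
          fs: int, tmin: float, tmax: float) -> Tuple[List[List[List[float]]], List[int]]:
    """Cut channel-major EEG into fixed windows [tmin, tmax) seconds around cues.

    Returns (epochs, labels); epochs[k] is a channels x samples list of lists.
    Cues whose window would run past either end of the recording are skipped.
    """
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    n_samples = len(signal[0])
    epochs: List[List[List[float]]] = []
    labels: List[int] = []
    for onset, name in events:
        a, b = onset + start, onset + stop
        if a < 0 or b > n_samples:
            continue
        epochs.append([list(ch[a:b]) for ch in signal])
        labels.append(LABELS.index(name))
    return epochs, labels
```

Each epoch keeps the channels x samples layout, which lets convolutional layers look across electrodes and a recurrent layer then step through time.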

Extended Abstract

Team 9: The Neuroevolution of Collaborative Decision-Making in Robotic Assistants

Joao Gaspar Oliveira Cunha (University of Minho)

How can robots learn to collaborate naturally with humans? This project explores how principles of Neuroevolution and Dynamic Field Theory can give rise to adaptive and interpretable control in robotic assistants. By evolving the neural architectures that govern perception, memory, and decision-making, our system enables robots to learn when to assist and when to act independently in shared tasks. In a human–robot packaging scenario, evolved controllers exhibit emergent collaboration, complementarity, and self-organization, without manual tuning. This work proposes a new generation of evolved neuroadaptive robotic partners capable of fluid, human-like collaboration shaped by the principles of the brain.
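
Dynamic Field Theory models perception and decision-making with neural fields whose activation forms stable peaks under local excitation and global inhibition; neuroevolution would then search over field parameters and connectivity. A minimal sketch of one Amari-style 1-D field, integrated with the Euler method; the parameter values are illustrative assumptions, and the evolutionary search itself is not shown:

```python
import math
from typing import List, Sequence


def sigmoid(v: float, beta: float = 4.0) -> float:
    """Field output nonlinearity: near 0 below threshold, near 1 above it."""
    return 1.0 / (1.0 + math.exp(-beta * v))


def simulate_field(stimulus: Sequence[float], h: float = -2.0, tau: float = 10.0,
                   dt: float = 1.0, steps: int = 200, a_exc: float = 3.0,
                   sigma_exc: float = 3.0, g_inh: float = 0.5) -> List[float]:
    """Euler-integrate tau * du/dt = -u + h + s + sum_j w(i - j) * f(u_j)."""
    n = len(stimulus)
    # Interaction kernel: Gaussian local excitation minus constant global inhibition.
    w = [[a_exc * math.exp(-((i - j) ** 2) / (2 * sigma_exc ** 2)) - g_inh
          for j in range(n)] for i in range(n)]
    u = [h] * n  # start at the resting level
    for _ in range(steps):
        out = [sigmoid(v) for v in u]
        u = [u[i] + dt / tau * (-u[i] + h + stimulus[i]
                                + sum(w[i][j] * out[j] for j in range(n)))
             for i in range(n)]
    return u
```

Parameters such as `h`, `a_exc`, and `g_inh` are exactly the kind of quantities an evolutionary loop could mutate and select on task performance instead of tuning them by hand.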

Short Video | Poster

Team 10: Toward a Gaze-Independent Brain-Computer Interface Using the Code-Modulated Visual Evoked Potentials

Radovan Vodila (Donders Institute, Radboud University)

Our project works toward a gaze-independent brain–computer interface (BCI) that enables communication without eye movements, which is crucial for paralyzed and locked-in users who have lost control over their eye movements. We employ noise-tagging of symbols and decode covert visuospatial attention from electroencephalography (EEG). By extending visual speller BCIs beyond gaze dependency, this work aims to create inclusive and effortless communication systems at the forefront of brain-based assistive technology, restoring interaction and connection for those who have lost all conventional means of expression.
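
In noise-tagging, each symbol is modulated by its own pseudo-random bit sequence, and the attended symbol is identified by which code the EEG response correlates with best. A minimal sketch of that matching step, assuming for simplicity that each symbol's template is its stimulation code itself; practical decoders estimate templates from calibration data. The code generator and lengths are hypothetical:

```python
import math
import random
from typing import List, Sequence


def make_codes(n_symbols: int, length: int, seed: int = 0) -> List[List[int]]:
    """Hypothetical stand-in for stimulation codes (real systems use e.g. Gold codes)."""
    rng = random.Random(seed)
    return [[rng.randint(0, 1) for _ in range(length)] for _ in range(n_symbols)]


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb) if sa and sb else 0.0


def decode(response: Sequence[float], templates: Sequence[Sequence[float]]) -> int:
    """Index of the template that correlates best with the single-trial response."""
    scores = [pearson(response, t) for t in templates]
    return max(range(len(scores)), key=scores.__getitem__)
```

Because the codes are nearly uncorrelated with one another, only the attended symbol's template yields a high score, regardless of where on the screen the user's eyes rest.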

Extended Abstract | Poster

Team 11: Neuromorphic BCI for HRI: Leveraging Spiking Neural Networks, Reservoir Computing, and Memristive Hardware for Biologically Plausible and Low-Power Interaction

Denis Yamunaque (Universidad Complutense de Madrid)

Our project introduces a neuromorphic Brain-Computer Interface (BCI) based on reservoir computing (RC) and Spiking Neural Networks (SNNs). The design emphasizes biological realism, leveraging the principles of RC and SNNs. Its key innovation is the potential for physical implementation using memristors, which significantly enhances energy efficiency and adaptability in the physical readout layer. This approach bridges the gap between biologically plausible neural processing and efficient hardware, aiming at advanced, low-power BCI applications in human-robot interaction.
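
Reservoir computing keeps a random recurrent network fixed and trains only a linear readout, which is why the readout is the natural place for memristive hardware. The sketch below is a conventional rate-based echo state network rather than a spiking reservoir, with a floating-point ridge-regression readout; the reservoir size, scaling rule, and one-step memory task are assumptions chosen to keep it small:

```python
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def make_reservoir(n: int, seed: int = 0, norm: float = 0.9) -> Tuple[Matrix, List[float]]:
    """Random recurrent and input weights, fixed after creation (never trained)."""
    rng = random.Random(seed)
    w = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    # Scale the max absolute row sum below 1; since tanh is 1-Lipschitz this makes
    # the state update a contraction, a simple sufficient condition for fading memory.
    scale = norm / max(sum(abs(v) for v in row) for row in w)
    w = [[v * scale for v in row] for row in w]
    w_in = [rng.uniform(-0.5, 0.5) for _ in range(n)]
    return w, w_in


def run(w: Matrix, w_in: List[float], inputs: Sequence[float]) -> Matrix:
    """Drive the reservoir and collect states, each with a constant bias feature."""
    n = len(w_in)
    x = [0.0] * n
    states = []
    for u in inputs:
        x = [math.tanh(sum(w[i][j] * x[j] for j in range(n)) + w_in[i] * u)
             for i in range(n)]
        states.append(x + [1.0])
    return states


def solve(a: Matrix, b: List[float]) -> List[float]:
    """Gauss-Jordan elimination with partial pivoting (small dense systems)."""
    n = len(b)
    m = [row[:] + [bv] for row, bv in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [rv - f * cv for rv, cv in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def train_readout(states: Matrix, targets: Sequence[float],
                  ridge: float = 1e-6) -> List[float]:
    """Ridge regression: the only trained part of a reservoir computer."""
    d = len(states[0])
    a = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(s[i] * y for s, y in zip(states, targets)) for i in range(d)]
    return solve(a, b)
```

Swapping the reservoir for a spiking one changes `run`, but the readout stays a single weight vector, which is what a memristor crossbar would store as conductances.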

Intro Slides

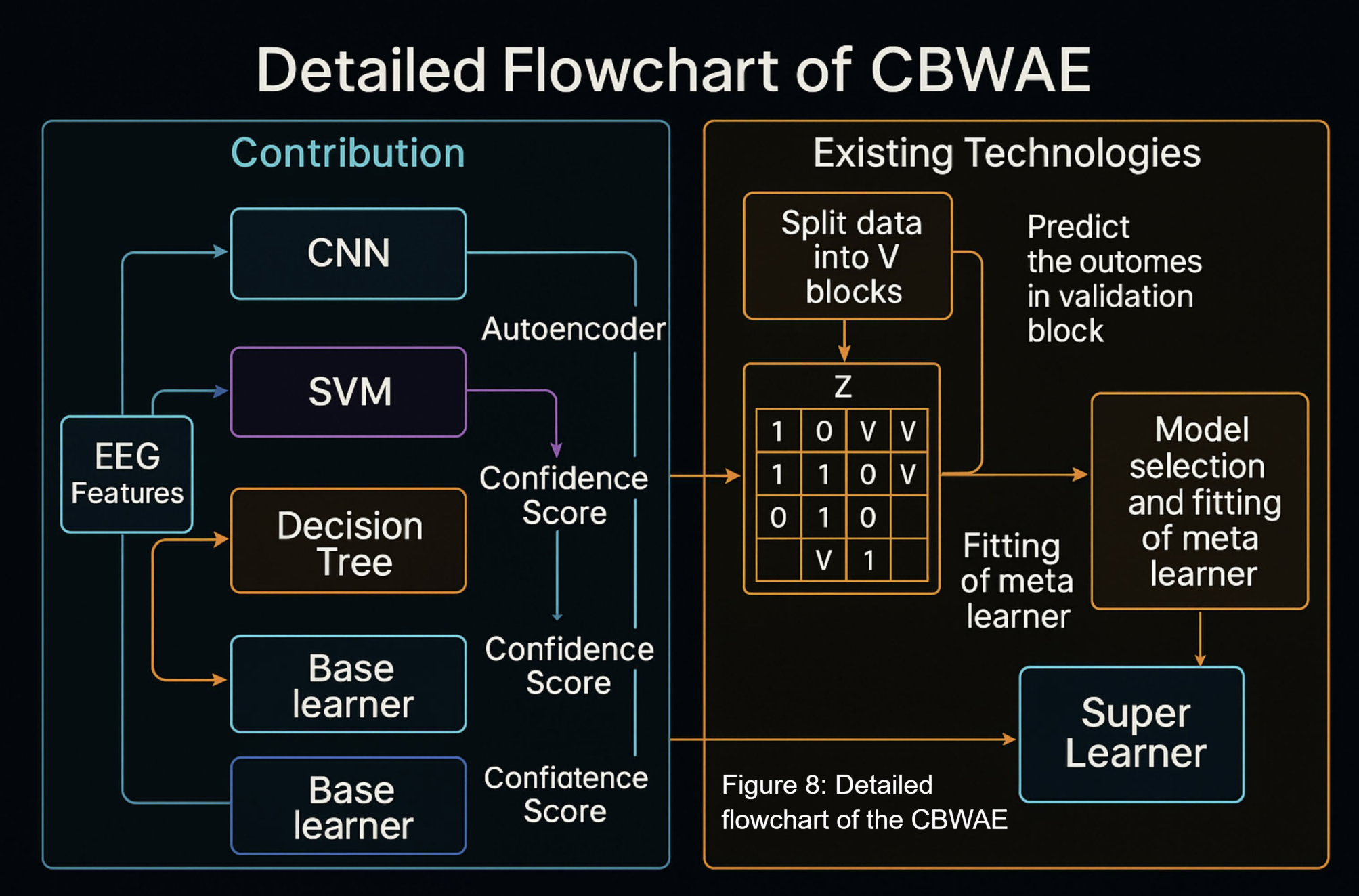

Team 12: Real-Time Assistive BCI: A Quantum-Optimized Neurointerface for Motor Intent Decoding

Mustafa Arif (Northern Collegiate Institute)

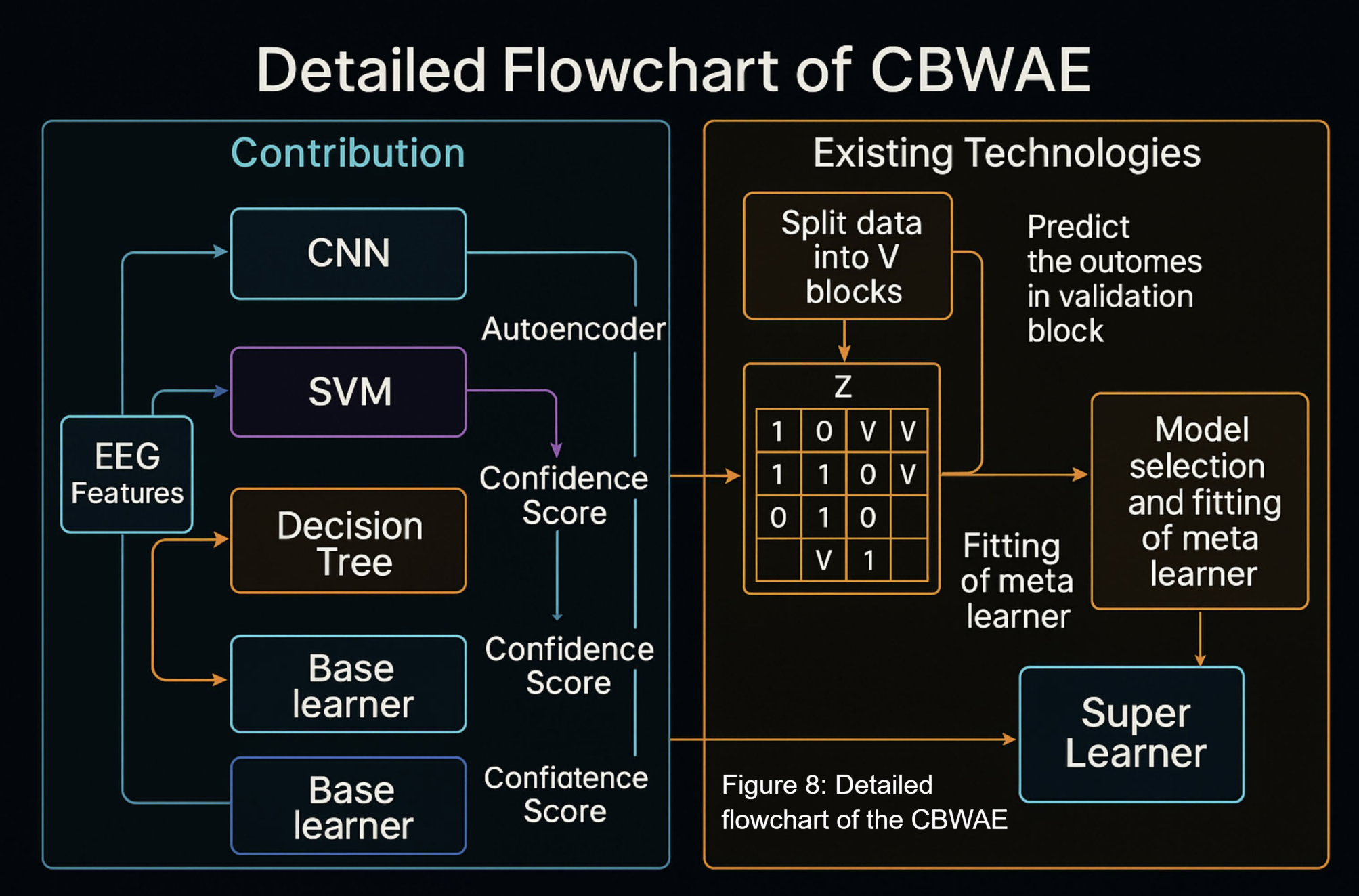

Motor impairments affect over 1.3 billion people worldwide, yet most brain-computer interfaces (BCIs) remain invasive or prohibitively expensive. This project presents CBWAE (Correlation-Based Weighted Average Ensemble), a novel AI-driven, non-invasive neurointerface that decodes motor intent in real time with 95% accuracy using an $80 custom EEG headset.

Unlike existing BCIs, CBWAE dynamically assigns models per motor class and optimizes feature-model pairs via quantum-inspired search (Grover’s algorithm and QAOA), achieving instant adaptability across users. The system enables seamless control of assistive devices (prosthetics, exoskeletons, or wheelchairs), bridging neuroscience, AI, and accessibility for a more inclusive human–machine future.
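
The abstract does not define the weighting scheme. One plausible reading of a correlation-based weighted average with per-class model assignment, assumed here and not taken from the project: on validation data, each model earns a weight per class equal to the non-negative correlation between its predicted probability for that class and the true class indicator; at run time, each class score is a weighted average of the models' probabilities. The quantum-inspired feature search is not shown, and all names are hypothetical:

```python
import math
from typing import List, Sequence

Probs = List[List[float]]  # one row of class probabilities per trial


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb) if sa and sb else 0.0


def class_weights(val_probs: List[Probs], val_labels: Sequence[int],
                  n_classes: int) -> List[List[float]]:
    """weights[m][c]: how well model m's class-c probability tracks true class c."""
    weights = []
    for probs in val_probs:
        row = []
        for c in range(n_classes):
            p_c = [p[c] for p in probs]
            is_c = [1.0 if y == c else 0.0 for y in val_labels]
            row.append(max(0.0, pearson(p_c, is_c)))  # ignore anti-correlated models
        weights.append(row)
    return weights


def ensemble_predict(model_probs: List[List[float]], weights: List[List[float]]) -> int:
    """Per-class weighted average of model probabilities; returns the argmax class."""
    n_classes = len(weights[0])
    scores = []
    for c in range(n_classes):
        total = sum(w[c] for w in weights)
        s = sum(w[c] * p[c] for w, p in zip(weights, model_probs))
        scores.append(s / total if total > 0 else 0.0)
    return max(range(n_classes), key=scores.__getitem__)
```

Weighting per class rather than per model lets a model that is reliable for one motor class dominate that class without influencing the others.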

Extended Abstract | Poster

Team 13: Autonomous Apple Harvesting with Immersive Human-Robot Interaction

Chris Ninatanta (Washington State University)

The apple industry is critical to Washington State's economy; however, labor shortages, reliance on costly seasonal workers, and narrow harvesting windows threaten its sustainability. Autonomous apple-harvesting robots could provide relief to farmers; however, recent prototypes typically capture less than 60% of available fruit, because dense foliage, clustered apples, and obstructing branches reduce performance. To address this, I propose combining human and artificial intelligence (AI) through virtual reality (VR), enabling immersive human-robot interaction. Human movements and decisions collected in orchards during harvest and within VR environments will train the AI, improving autonomy and raising harvesting efficiency above 80%.

Intro Slides

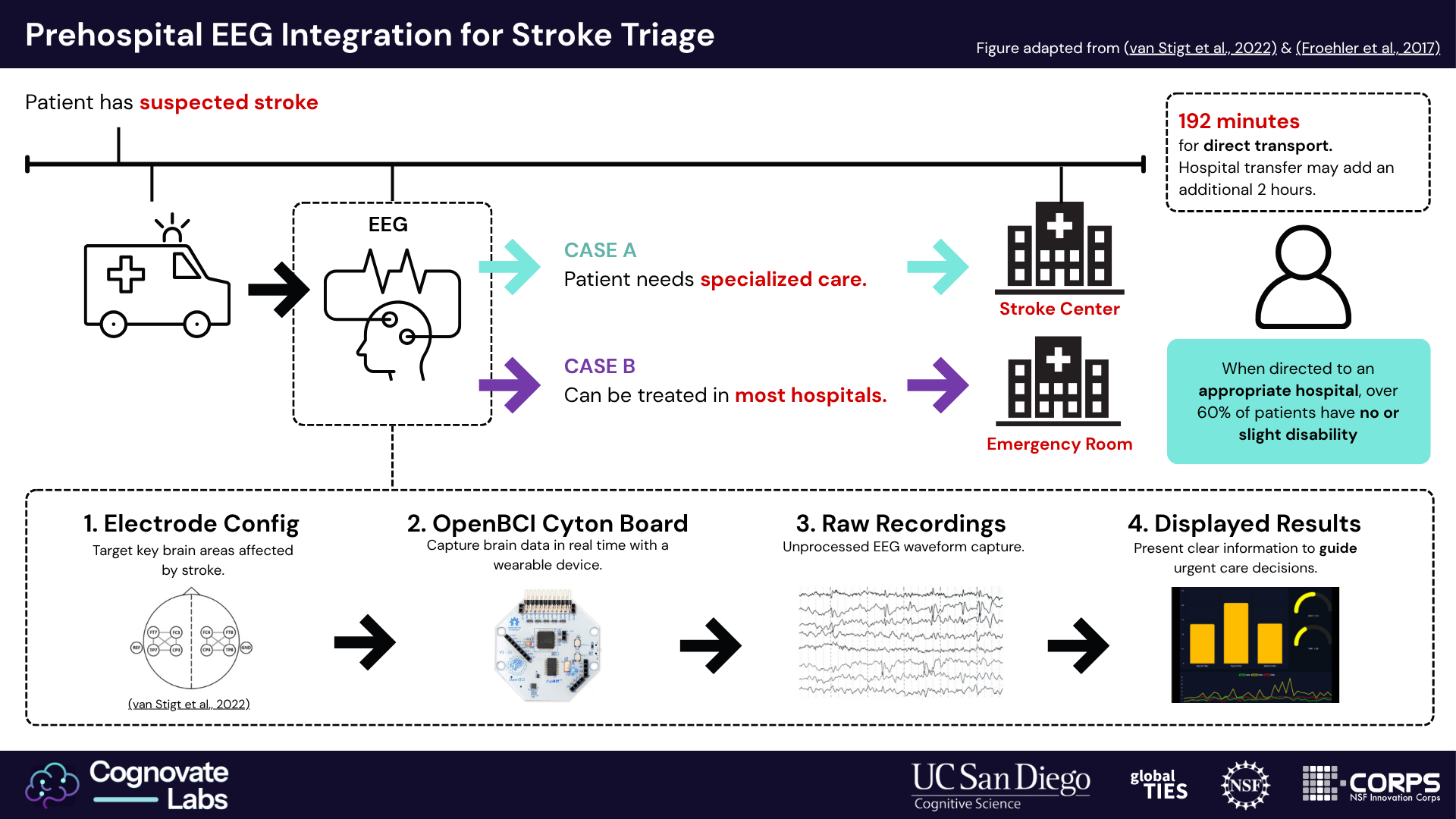

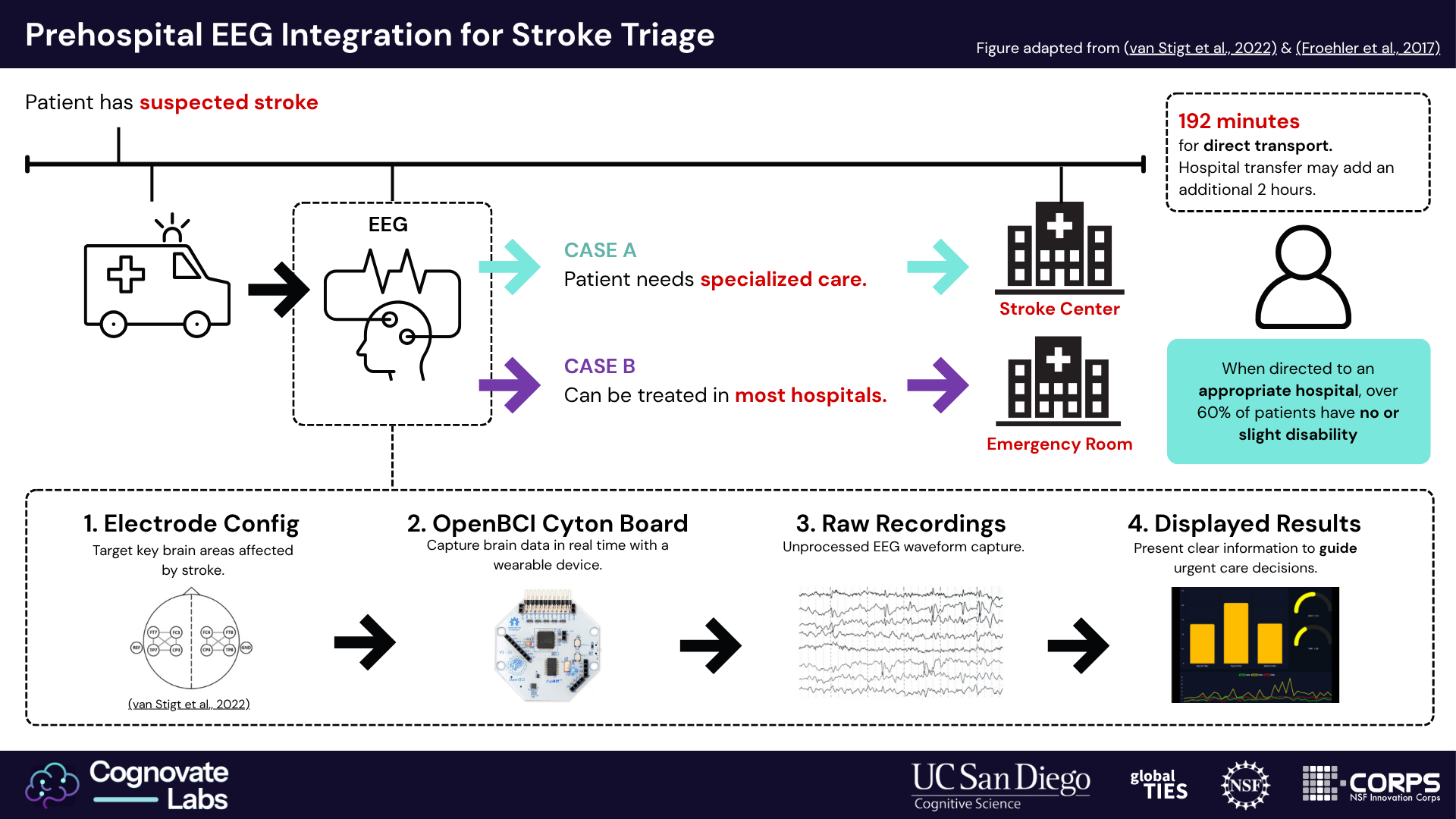

Team 14: Pre-Hospital EEG Integration for Stroke Triage

Hailey Fox (University of Southern California San Diego)

40% of strokes are Large Vessel Occlusion (LVO), which require treatment only available at specific hospitals. Every 30-minute delay before initiating treatment lowers patient outcomes by 7%. Current ambulance protocol rushes patients to the nearest hospital. Taking patients to the incorrect hospital produces a median delay of 109 minutes. Cognovate Labs is developing a pre-hospital EEG device to provide real-time cognitive insights that cannot be identified through conventional stroke assessments. Our device enables an informed decision to take patients directly to the appropriate care center, thereby increasing the number of patients who achieve functional independence after recovery.

Extended Abstract | Intro Slides | Short Video | Poster

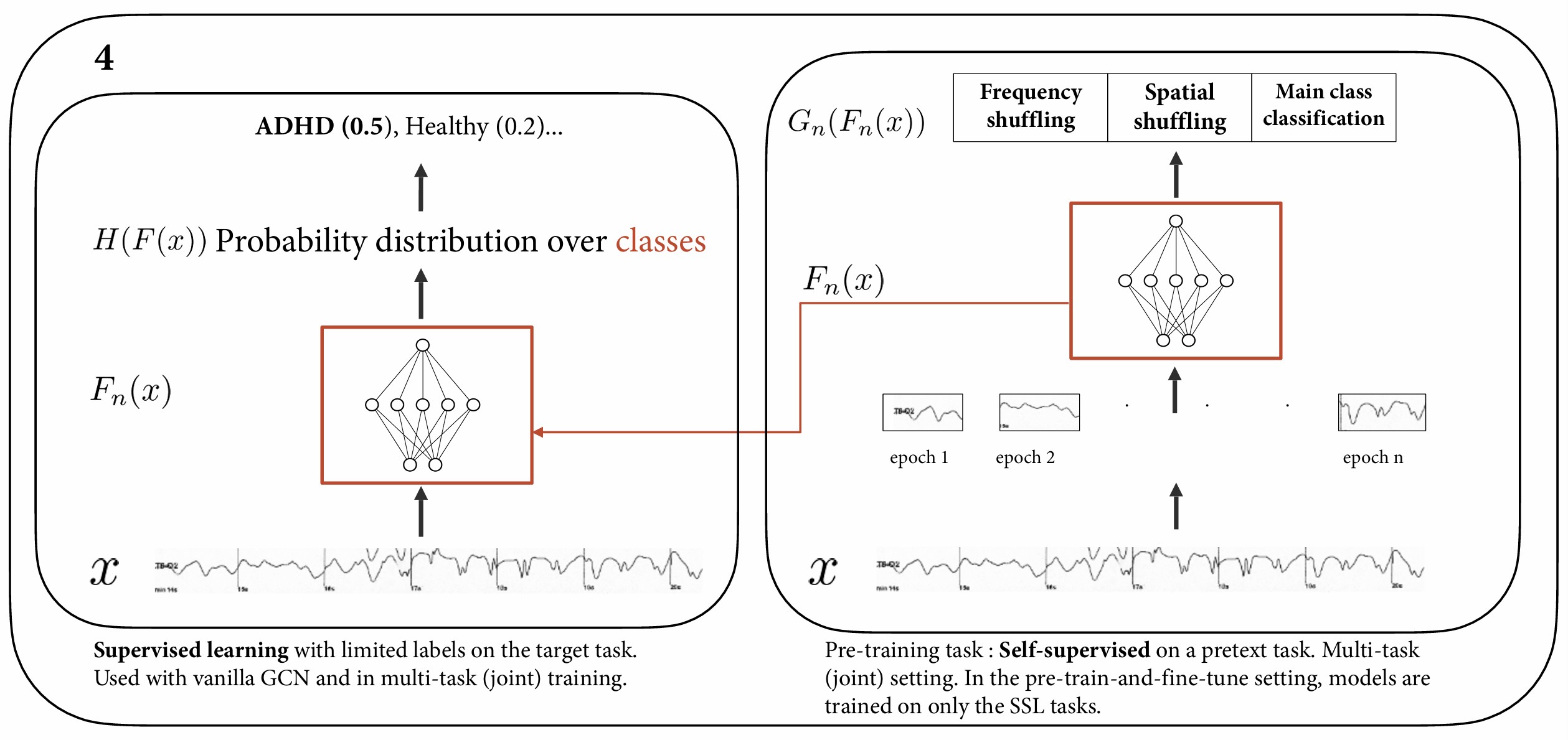

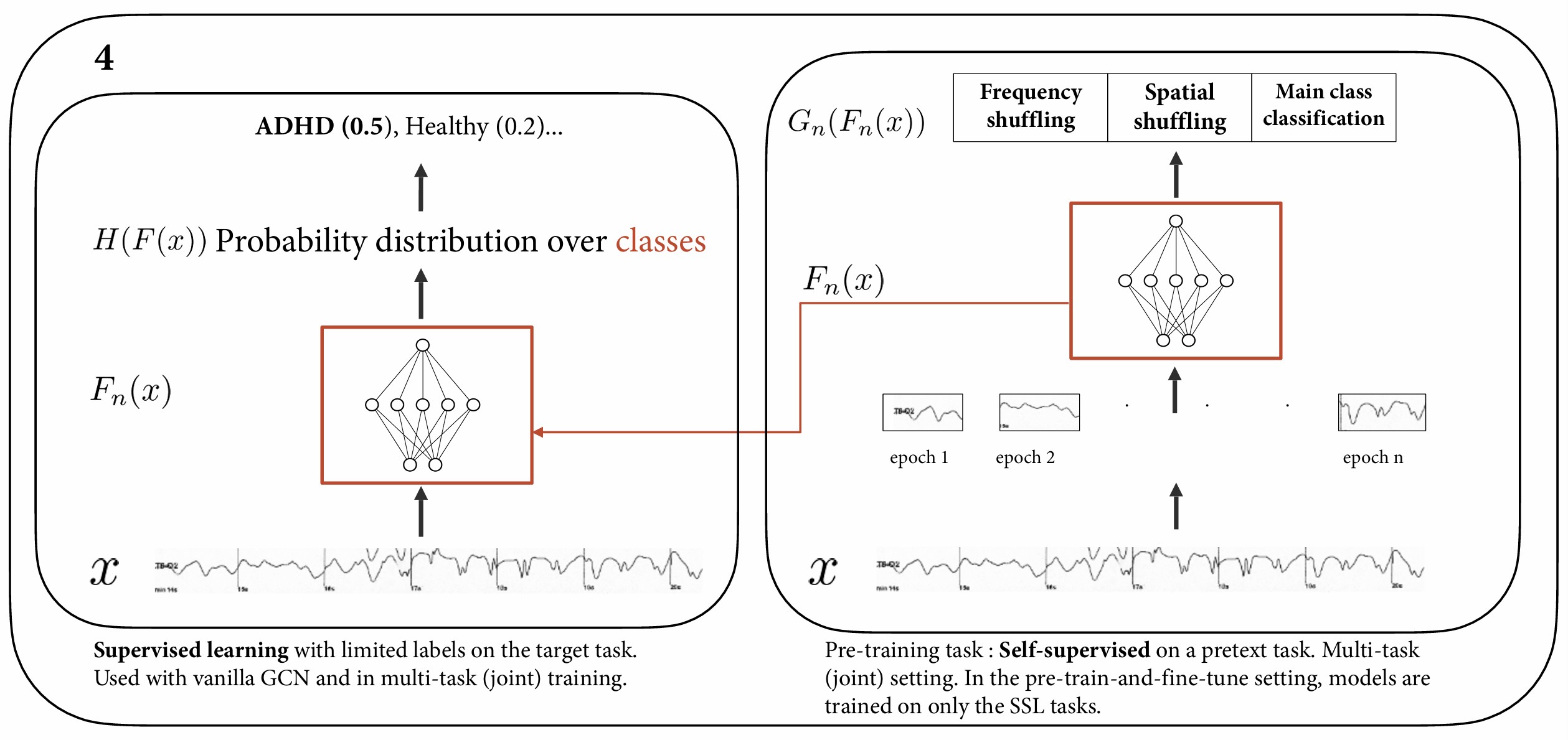

Team 15: Bring Your Own Labels: Graph-Based Self-Supervised Learning for EEG with Implications for Brain-Centered HRI

Udesh Habaraduwa (Tilburg University)

"Bring Your Own Labels" explores how graph neural networks and self-supervised learning can unlock hidden patterns in EEG data for psychiatric screening. By building a reproducible, scalable preprocessing pipeline and testing novel graph-based models, this work advances the search for reliable biomarkers in mental health. Results show that graph-based approaches outperform conventional baselines, highlighting their potential to transform human-centered neurotechnology and adaptive human-robot interaction.
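
The abstract does not specify how the EEG graphs are built. A common choice, assumed here, treats electrodes as nodes, links channels whose signals are strongly correlated, and updates node features with one symmetric-normalized propagation step of the kind used in graph convolutional networks (without learned weights). The threshold, feature layout, and function names are hypothetical, and the self-supervised objective is not shown:

```python
import math
from typing import List, Sequence


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb) if sa and sb else 0.0


def correlation_graph(signals: Sequence[Sequence[float]],
                      threshold: float = 0.5) -> List[List[int]]:
    """Adjacency matrix linking channels with |r| >= threshold (no self-loops)."""
    n = len(signals)
    return [[1 if i != j and abs(pearson(signals[i], signals[j])) >= threshold else 0
             for j in range(n)] for i in range(n)]


def propagate(adj: List[List[int]], feats: List[List[float]]) -> List[List[float]]:
    """One GCN-style step, D^-1/2 (A + I) D^-1/2 X, without a weight matrix."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[sum(a_hat[i][j] * feats[j][k] / math.sqrt(deg[i] * deg[j]) for j in range(n))
             for k in range(len(feats[0]))] for i in range(n)]
```

After propagation, strongly coupled channels share information while isolated channels keep their own features, which is the structural prior a graph model adds over treating channels independently.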

Extended Abstract | Poster
